Executive Summary

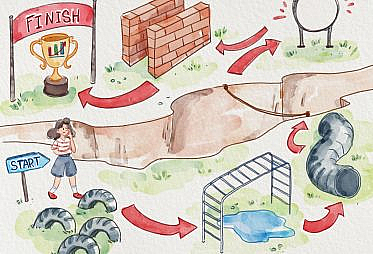

We want our work to be impactful and used by stakeholders, and we keep hearing that developing data products is the answer. Here’s a step-by-step guide on how to get there.

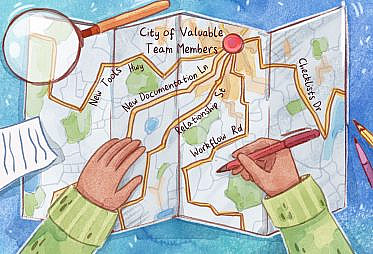

Successful data products help our collaborators decide how to improve the company’s health. To get there, before any development starts, we first need a shared understanding of the context: why something needs to happen (i.e., our objective), what specifically needs

…